Tiny Machine Learning (TinyML) is a giant opportunity in the fast-growing field of machine learning. It enables resource-constrained devices to perform on-device inference at the edge of the network. These devices require specialized software, such as TensorFlow Lite, an open-source deep learning framework, to run optimized deep learning models. The main objectives of TinyML are:

- Small, low-cost devices that operate at extremely low power (around 1 mW), so that batteries or energy harvesting can power them for extended periods of time.

- Model optimization for resource-constrained devices (CPU, Memory, Storage)

- Tapping into the data that isn’t being processed at the edge (> 99%), on millions of devices that are already available.
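As a back-of-the-envelope illustration of why a budget of about 1 mW matters, the sketch below estimates battery life for a constant average power draw. The battery (2000 mAh at 3 V) and the 1 W comparison figure are hypothetical assumptions, and the estimate ignores self-discharge, conversion losses, and current peaks.

```python
def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Estimate battery life in hours for a constant average power draw."""
    energy_mwh = capacity_mah * voltage_v  # mAh x V = mWh of stored energy
    return energy_mwh / avg_power_mw

# Hypothetical battery: 2000 mAh at 3 V (assumed values, not from a datasheet).
tinyml_hours = battery_life_hours(2000, 3.0, avg_power_mw=1.0)       # ~1 mW device
one_watt_hours = battery_life_hours(2000, 3.0, avg_power_mw=1000.0)  # ~1 W, for comparison

print(f"1 mW: {tinyml_hours / 24:.0f} days, 1 W: {one_watt_hours:.0f} hours")
# 1 mW: 250 days, 1 W: 6 hours
```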

Next, we are going to go deeper into the subject, starting with its importance and finishing with its challenges.

Getting Started with Machine Learning

Getting started with this subject isn’t easy. There is a lot of math behind the algorithms that let a machine do even something as simple as classifying an image.

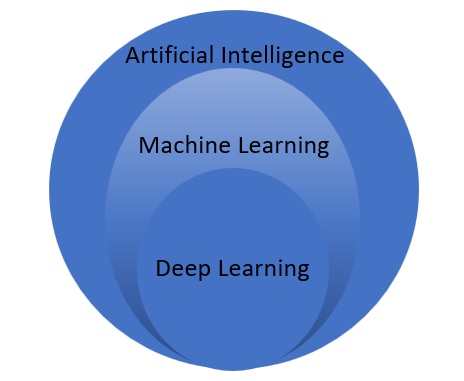

Well, we can just start with the basics. Machine Learning is a subset of Artificial Intelligence focused on developing algorithms that learn to solve complex problems by searching for patterns in data. Deep learning is a type of Machine Learning that combines Neural Networks with large amounts of data (Big Data).

Deep Learning: A family of techniques that teach computers to perform human-level tasks such as face recognition, virtual assistants, and many others.

Big Data: “Big data” is defined as larger, more complex data sets, particularly from new data sources that are growing at an exponential rate. Examples include social media, stock exchanges, etc.

Without overcomplicating the subject, here are some common machine learning tasks, focusing on their practical side instead of the boring big names and formulas (we will get there eventually):

- Image classification: The process of assigning a category (label) to an image based on the groups of pixels or vectors it contains.

- Object detection: It is a machine’s ability to identify and locate one or more targets in an image or video data.

- Segmentation (e.g., autonomous cars): Assigning every pixel of the image to a category, such as cars or people, which separates the image into its various components.

- Speech recognition: Converting voice commands into other forms, such as text, or into an action, like activating a relay.

- Recommendation systems (products or users): They generally rank items by their predicted ratings. Developers use them to predict users’ choices and offer relevant suggestions (YouTube, Facebook, etc.).

The usual way of handling data generated at the edge is to collect it with sensors and send it to big data centers, where it can be analyzed. With the help of specialized processors that are well suited to machine learning, such as GPUs or TPUs, large amounts of data can be analyzed for patterns. This requires data centers with a lot of computational power, which are very power-hungry. Some of them have to be built near places where the processors can be cooled, like the Google data center in the Netherlands.

This becomes a problem when we need to rapidly analyze patterns in sensor data and make a decision: big data centers aren’t well suited to this kind of operation. The following table summarizes the pros and cons:

| Pros | Cons |
|---|---|
| Computational power | High power consumption |
| High data throughput (bandwidth) | Big corporations (privacy and hacker risks) |
| | No interactivity with the end user |
| | High latency (far away from the edge) |

At this point, you may be asking: what is the point of using tiny devices at the edge of the network? Well, I will answer as best I can.

Why Do We Need TinyML?

The traditional analysis of large data sets is not very efficient. Big data centers have big computers that suck up all the energy they want, without any regard for the planet. Machine Learning models are trained and optimized without taking into account any constraints on storage, memory, or processing power.

At the edge of the network, we have tiny devices that can’t take all this heat. A couple of years ago, Pete Warden and Daniel Situnayake, authors of the TinyML book, showed a way to mitigate the problem with TensorFlow Lite Micro (TensorFlow Lite for Microcontrollers), a lean version of the well-known TensorFlow Lite framework. The trained Machine Learning model is converted and optimized so that it can run on a small microcontroller with all its constraints.

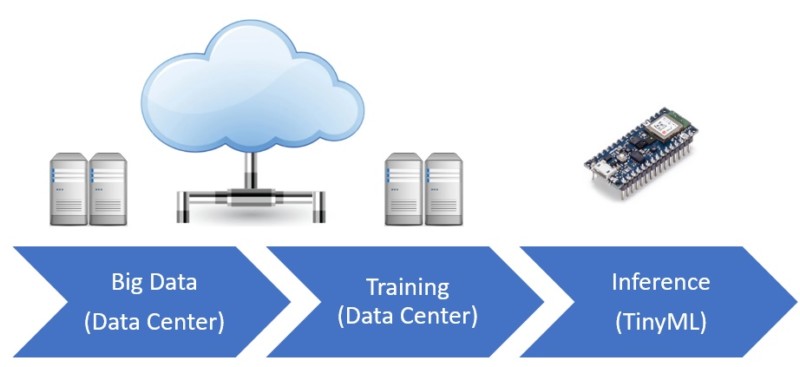

The goal is to train the model in big data centers and then convert it to run on tiny devices at the edge. Then, instead of sending the data to the cloud for processing, as in a typical IoT setup, we can analyze it in situ and make decisions based on the information that the sensors feed to the microcontroller.
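To put rough numbers on the difference, here is a sketch comparing how much data leaves a device per day when raw audio is streamed to the cloud versus when only the inference result is sent. The 16 kHz, 16-bit mono microphone and the one-byte label per second are assumed values chosen for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def raw_audio_bytes_per_day(sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Bytes per day if the device streams raw mono audio to the cloud."""
    return sample_rate_hz * bytes_per_sample * SECONDS_PER_DAY

def label_bytes_per_day(labels_per_second: int, bytes_per_label: int) -> int:
    """Bytes per day if the device only sends its inference result."""
    return labels_per_second * bytes_per_label * SECONDS_PER_DAY

cloud = raw_audio_bytes_per_day(16_000, 2)  # assumed 16 kHz, 16-bit microphone
edge = label_bytes_per_day(1, 1)            # assumed one 1-byte label per second

print(f"cloud: {cloud / 1e9:.2f} GB/day, edge: {edge / 1e3:.1f} kB/day, ratio: {cloud // edge}x")
# cloud: 2.76 GB/day, edge: 86.4 kB/day, ratio: 32000x
```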

Millions of small microcontrollers are already available for us to retrieve data from. The applications range from agriculture to health, and the possibilities are endless. It is estimated that only a small portion of this data (about 1%) is currently analyzed, for example by devices like smartphones and smartwatches.

Having said that, adjusting to this new reality should be simple, but it is not. There are still challenges to overcome in order to access, analyze, and draw conclusions (inferences) from all the data that is generated every second. This could be the beginning of a major interaction between machines and humans, hopefully for the better.

How Does TinyML Work?

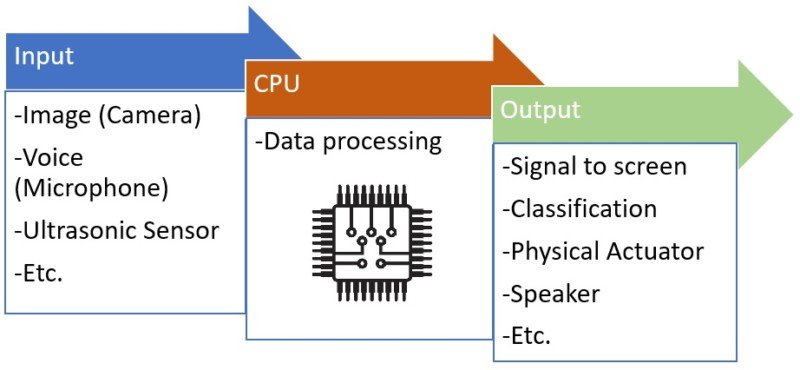

In a simple way, we have a sensor that receives data from the environment and a processor that tries to find patterns in all the noise. If the processor finds a valid pattern in the data, it activates an output.

The principle is the same as for a program on a generic Arduino Uno. What changes is that instead of a deterministic system, we have a system that can “fail”. In other words, it is probabilistic in nature, and this can be a problem. When we press an emergency button, it must always deliver the same outcome; there are lives at stake. In this case, even if an AI system gives a 99% probability of success, that isn’t enough, at least for now.
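The difference between deterministic and probabilistic behavior can be sketched in a few lines. `button_pressed` and `should_activate` are hypothetical helpers, and the logits and the 0.9 confidence threshold are made-up values; a real model would compute the scores from sensor data.

```python
import math

def button_pressed(pin_level: int) -> bool:
    """Deterministic logic: the same input always gives the same, certain answer."""
    return pin_level == 1

def softmax(scores: list[float]) -> list[float]:
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def should_activate(scores: list[float], target: int, threshold: float = 0.9) -> bool:
    """Probabilistic logic: activate the output only when the model is confident.

    Even above the threshold, the prediction can still be wrong.
    """
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best == target and probs[best] >= threshold

# Hypothetical logits for the classes ["silence", "yes", "no"].
print(button_pressed(1))                    # True, every time
print(should_activate([0.1, 4.0, 0.2], 1))  # True: confident "yes" (p ~ 0.96)
print(should_activate([0.1, 1.0, 0.9], 1))  # False: unsure, so no action
```

Raising the threshold makes false activations rarer but never impossible, which is why such a system is not yet suitable for an emergency button.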

Another important aspect is the distinction between training and inference. TinyML devices do not have the resources to train neural networks; they only run lightweight versions of final models that were trained in a big data center or locally on a powerful computer. This is explained in more detail in the following section.
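A minimal sketch of this split, assuming a toy logistic-regression model in place of a neural network: `train` runs many passes of gradient descent (the expensive part, done on a powerful machine), while `infer` only needs the two learned numbers `w` and `b`. The data set, learning rate, and number of epochs are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs: int = 2000, lr: float = 0.5):
    """Training (powerful machine): repeatedly adjust the weights to reduce the error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(w * x + b)
            # Gradient of the log-loss with respect to w and b
            w -= lr * (p - y) * x
            b -= lr * (p - y)
    return w, b

def infer(x: float, w: float, b: float) -> int:
    """Inference (tiny device): one multiply, one add, one comparison."""
    return 1 if w * x + b > 0 else 0

# Hypothetical normalized sensor readings with labels (1 = "hot", 0 = "normal").
data = [(0.1, 0), (0.2, 0), (0.3, 0), (0.7, 1), (0.8, 1), (0.9, 1)]
w, b = train(data)                         # done once, on a powerful computer
print([infer(x, w, b) for x, _ in data])   # only w and b ship to the device
# [0, 0, 0, 1, 1, 1]
```

Because the sigmoid is greater than 0.5 exactly when `w * x + b > 0`, the device does not even need to compute an exponential to make its decision.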


TinyML Model Optimization

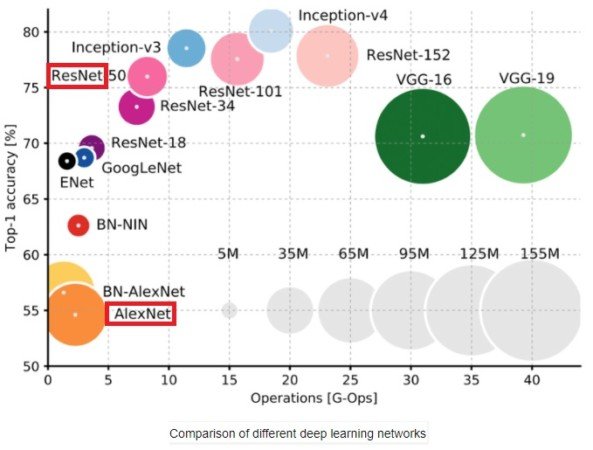

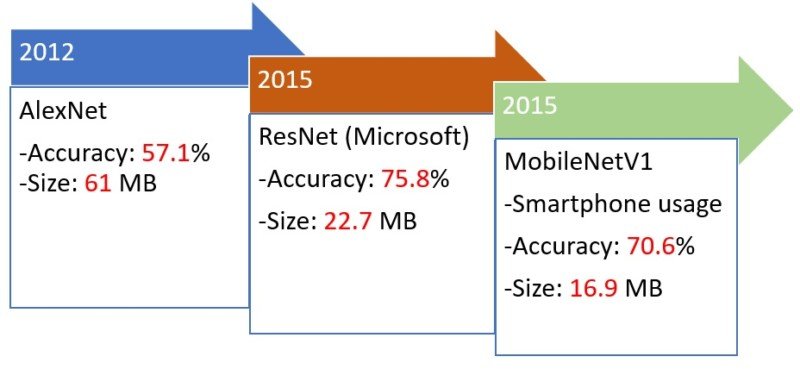

Machine Learning models have grown in size and complexity since 2012, when AlexNet was introduced. AlexNet is a Convolutional Neural Network (CNN) designed primarily by Alex Krizhevsky. When it competed in the ImageNet Large Scale Visual Recognition Challenge, it became the first CNN to win.

The task was to classify images into a thousand classes from the ImageNet data set. AlexNet’s accuracy of 57.1% isn’t that impressive by today’s standards. A couple of years later, with the arrival of MobileNetV1 and the widespread adoption of smartphones, the focus changed: for models to work on a smartphone, they had to be smaller. A compromise had to be made, with accuracy suffering as the size of the model was reduced.

Even at a size of 16 MB, we can’t simply transfer the MobileNet model to a tiny device like the Arduino Nano 33 BLE Sense, which has only 256 KB of RAM (and 1 MB of flash memory).
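The gap can be checked with simple arithmetic. The sketch assumes binary units (1 MB = 1024 KB) and uses the 16 MB MobileNet size quoted above, the board’s 256 KB of RAM and 1 MB of flash, and the roughly 4x reduction that int8 quantization gives.

```python
KB = 1024
MB = 1024 * KB  # binary units assumed

model_float32 = 16 * MB          # MobileNet size quoted in the text
model_int8 = model_float32 // 4  # roughly 4x smaller after int8 quantization
ram = 256 * KB                   # Arduino Nano 33 BLE Sense SRAM
flash = 1 * MB                   # Arduino Nano 33 BLE Sense flash

print(f"float32 model: {model_float32 // ram}x the RAM, {model_float32 // flash}x the flash")
print(f"int8 model: {model_int8 // ram}x the RAM, {model_int8 // flash}x the flash")
# float32 model: 64x the RAM, 16x the flash
# int8 model: 16x the RAM, 4x the flash
```

Quantization alone is not enough, which is why it is combined with the other compression techniques.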

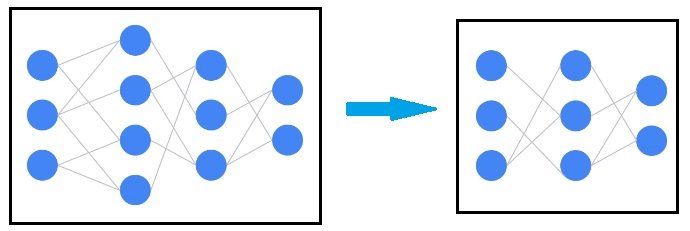

Now that we know that, for now, the objective of TinyML is to make inferences at the edge of the network, it is time to address the elephant (literally) in the room: how can we fit a trained model into a tiny device? By removing everything that isn’t essential from the neural network. Here are several model compression techniques:

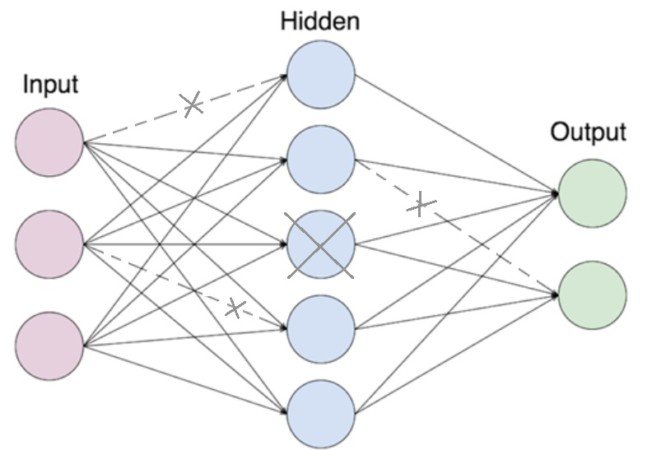

- Pruning: The idea is to remove some of the connections within the network or even some neurons, making sure that we don’t lose too much accuracy in the process. This reduces the computing power needed and the size of the model.

- Quantization: Basically, instead of storing the weights as 32-bit floating-point numbers and using floating-point arithmetic, we use 8-bit integers (int8) and integer arithmetic, reducing the number of bytes per weight from 4 to 1. This reduces the model size by about 4x, which is important because tiny devices don’t have a lot of memory.

- Knowledge Distillation: A big name for a simple idea: a large, trained “teacher” network is used to train a smaller “student” network to reproduce its outputs. In the end, we keep a smaller, simplified version of the original network.
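The three ideas can be sketched in plain Python on a hypothetical list of weights from one small layer. This is an illustration under simplifying assumptions, not how a real toolchain works internally: pruning here is simple magnitude pruning, quantization is symmetric per-tensor int8, and distillation shows only the soft-target loss with an assumed temperature of 2.

```python
import math
from array import array

# --- Pruning: drop the smallest-magnitude weights ---------------------------
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

# --- Quantization: float32 -> int8 ------------------------------------------
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric int8 quantization: each w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]

# --- Knowledge distillation: soft-target loss -------------------------------
def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature: float = 2.0) -> float:
    """Cross-entropy between the teacher's softened outputs and the student's."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))

# Hypothetical weights of one small layer
weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.45, -0.2, 0.02]

pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, -0.6, 0.0, 0.45, 0.0, 0.0]

q, scale = quantize_int8(weights)
float_bytes = len(array("f", weights).tobytes())  # C float: 4 bytes on common platforms
int8_bytes = len(array("b", q).tobytes())         # signed char: 1 byte
print(float_bytes, int8_bytes)                    # 4x fewer bytes for the int8 version
```

During distillation training, the student would be updated to lower `distillation_loss`, which is smallest when its softened outputs match the teacher’s.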

Much of this is done with TensorFlow tooling. The TensorFlow Lite converter (together with the TensorFlow Model Optimization Toolkit for techniques like pruning) takes the model that was trained on a more powerful machine and compresses it without losing too many key characteristics, such as accuracy. TensorFlow Lite Micro then runs the result on a tiny device like an Arduino.
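On the device side, the converted `.tflite` file is commonly compiled into the firmware as a C byte array; the TensorFlow Lite Micro examples generate it with the `xxd -i` command. The helper `to_c_array` below is a hypothetical Python equivalent for illustration, and `fake_model` stands in for the bytes you would read from a real converted model file.

```python
def to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw model bytes as C source, similar in spirit to `xxd -i`."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{byte:02x}" for byte in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )

# In practice (hypothetical path): data = open("model.tflite", "rb").read()
fake_model = bytes(range(20))  # stand-in bytes, not a real model
print(to_c_array(fake_model))
```

Storing the model as a `const` array lets the toolchain place it in flash rather than in the much smaller RAM.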

The Benefits And Challenges Of TinyML

At the end of this journey, I am going to summarize the good and the bad of running Machine Learning on Tiny devices at the edge of a network.

| Benefits | Challenges |
|---|---|
| Energy efficiency: battery-powered devices, energy harvesting | Device heterogeneity: the need to standardize tiny devices’ hardware and firmware |
| Data security and system reliability: better protection against cyber-attacks, fewer transmission errors | MCU constraints: CPU, memory, storage, etc. |
| Latency: reduced delay from data transmission (local vs. cloud) | |
| Low cost | |

References

HarvardX’s Tiny Machine Learning (TinyML)

https://blogs.gartner.com/paul-debeasi/files/2019/01/Train-versus-Inference.png

https://en.wikipedia.org/wiki/AlexNet

https://en.wikipedia.org/wiki/ImageNet